Bio

I am a PhD student at the Gatsby Computational Neuroscience Unit, UCL, advised by Peter Latham and Andrew Saxe.

I am currently visiting Jay McClelland and Andrew Lampinen at Stanford University, supported by a Bogue fellowship.

I use theoretical approaches to study how neural networks with different architectures [1] learn, including attention-based [2], fully-connected [3], and multimodal [4] networks.

Papers

[1] Saddle-to-Saddle Dynamics Explains A Simplicity Bias Across Neural Network Architectures

Yedi Zhang, Andrew Saxe, Peter E. Latham

ICLR 2026

webpage | arxiv

| [2] |

|

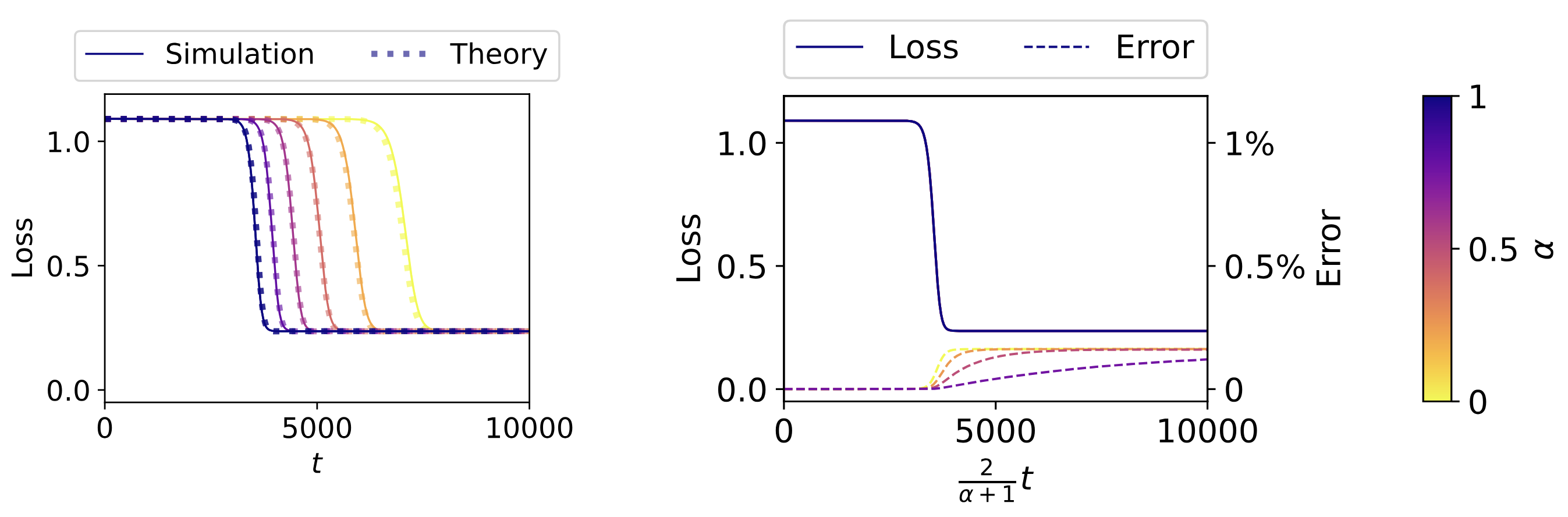

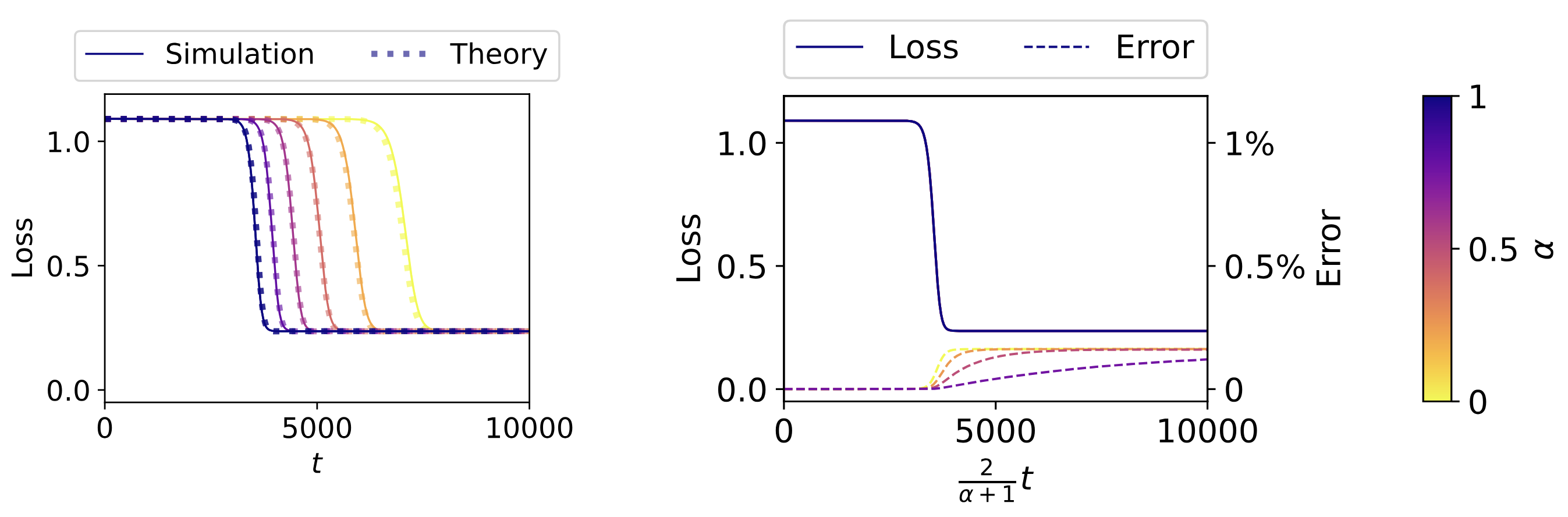

Training Dynamics of In-Context Learning in Linear Attention

Yedi Zhang, Aaditya K. Singh, Peter E. Latham*, Andrew Saxe*

ICML 2025 (Spotlight)

pmlr | openreview | arxiv | code |

talk

|

[3] When Are Bias-Free ReLU Networks Effectively Linear Networks?

Yedi Zhang, Andrew Saxe, Peter E. Latham

TMLR 2025

tmlr | arxiv

[4] Understanding Unimodal Bias in Multimodal Deep Linear Networks

Yedi Zhang, Peter E. Latham, Andrew Saxe

ICML 2024

webpage | pmlr | arxiv | code

Blog

2025-10-10

Exponential Family (teaching notes)

2024-09-17

Eigenvalue Perturbation Theorem

2024-04-16

Isserlis' Theorem

2024-01-21

My Cribsheet for Dynamical Systems

2023-04-06

Free Energy and EM Algorithm

2023-03-18

A Manual for Reading Independence

Fun

2025-12-29

Shows I Caught

2025-12-24

Theatre Ticket Deals in London
