My Cribsheet for Dynamical System

Table of Contents

- 1 Ordinary Differential Equation (ODE)
  - 1.1 First-Order Linear ODE
    - First-Order Linear Homogeneous ODE
    - First-Order Linear Non-homogeneous ODE
  - 1.2 Second-Order Linear ODE with Constant Coefficients
  - 1.3 First-Order Linear Differential Equation System
  - 1.4 Higher-Order Linear ODE with Constant Coefficients
- 2 Stability Analysis
  - 2.1 Fixed Point
  - 2.2 Stability Analysis of Linear Dynamical System
    - The 2D Case
  - 2.3 Stability Analysis of Nonlinear Dynamical System
- 3 From A Signal Processing Perspective
  - Green Function
    - Proof I
    - Proof II
  - Solving First-Order Linear ODE with Green Function
    - Definitions
    - Solutions

The most general (and maybe most important) fact I know about differential equations is that we generally do not know how to solve them. A few equations are simple enough, or lucky enough in their structure, that people have solved them systematically. For the infinitely many remaining equations that have no closed-form solution, one can only analyze them qualitatively, unless one is devoted to a career of solving them.

1 Ordinary Differential Equation (ODE)

The term "ordinary" is used in contrast with partial differential equations (PDE), which involve derivatives with respect to more than one independent variable.

There are several properties that we use to categorize ODEs.

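Order: the order of an ODE is the order of the highest derivative of the unknown function that appears in it.

Linear: the ODE is linear with respect to the unknown function and its derivatives.

Homogeneous: homogeneous is a qualifier used on top of linear ODEs, meaning all terms in the linear ODE are either the unknown function or one of its derivatives, each multiplied by a coefficient. There is no term that depends only on the independent variable.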

1.1 First-Order Linear ODE

First-Order Linear Homogeneous ODE

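A general first-order linear homogeneous ODE can be written as

$$\frac{dx}{dt} + p(t)\,x = 0$$

The solution is straightforward. We separate variables and integrate both sides:

$$\frac{dx}{x} = -p(t)\,dt \quad\Longrightarrow\quad \ln|x| = -\int p(t)\,dt + c \quad\Longrightarrow\quad x(t) = C e^{-\int p(t)\,dt}$$

where $C$ is an arbitrary constant fixed by the initial condition.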

First-Order Linear Non-homogeneous ODE

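A general first-order linear non-homogeneous ODE can be written as

$$\frac{dx}{dt} + p(t)\,x = f(t)$$

Its solution is

$$x(t) = C e^{-\int p(t)\,dt} + e^{-\int p(t)\,dt} \int f(t)\, e^{\int p(t)\,dt}\,dt$$

The first component of the solution is the same as the solution to the homogeneous ODE (the solution when $f(t) = 0$). The second component is a particular solution of the non-homogeneous ODE.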
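As a sanity check on the formula for a first-order linear non-homogeneous ODE, here is a minimal sketch that compares the closed-form solution with a numerical integration. It assumes the special case of constant coefficients, $\frac{dx}{dt} + p\,x = f_0$ with $x(0) = x_0$, where the general formula reduces to $x(t) = f_0/p + (x_0 - f_0/p)e^{-pt}$. The helper names `rk4` and `closed_form` and the numbers are illustrative only.

```python
import math


def rk4(rhs, x0, t0, t1, steps):
    """Integrate dx/dt = rhs(t, x) from t0 to t1 with the classical RK4 scheme."""
    h = (t1 - t0) / steps
    t, x = t0, x0
    for _ in range(steps):
        k1 = rhs(t, x)
        k2 = rhs(t + h / 2, x + h * k1 / 2)
        k3 = rhs(t + h / 2, x + h * k2 / 2)
        k4 = rhs(t + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return x


def closed_form(p, f0, x0, t):
    """Solution of dx/dt + p*x = f0 (constant p != 0 and f0) with x(0) = x0."""
    return f0 / p + (x0 - f0 / p) * math.exp(-p * t)


# Illustrative values, not taken from the text.
p, f0, x0, t_end = 2.0, 3.0, 1.0, 1.5
numeric = rk4(lambda t, x: f0 - p * x, x0, 0.0, t_end, 1000)
analytic = closed_form(p, f0, x0, t_end)
print(numeric, analytic)
```

The two printed values should agree to many digits; the homogeneous part $(x_0 - f_0/p)e^{-pt}$ decays and the particular part $f_0/p$ remains.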

1.2 Second-Order Linear ODE with Constant Coefficients

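For second-order linear ODEs, we generally do not have closed-form solutions if the coefficients are functions of $t$. With constant coefficients $b$ and $c$, however, the ODE

$$\ddot{x} + b\,\dot{x} + c\,x = f(t)$$

can be solved systematically.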

Second-Order Linear Homogeneous ODE with Constant Coefficients

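If $f(t) = 0$, the ODE is homogeneous:

$$\ddot{x} + b\,\dot{x} + c\,x = 0$$

We write down the associated characteristic equation

$$r^2 + b\,r + c = 0$$

For different cases where the characteristic equation has different roots, we have the following solutions to the ODE:

| Roots of $r^2 + br + c = 0$ | Solution of $\ddot{x} + b\dot{x} + cx = 0$ |
| --- | --- |
| Two distinct real roots $r_1 \neq r_2$ | $x(t) = C_1 e^{r_1 t} + C_2 e^{r_2 t}$ |
| One repeated real root $r_1 = r_2 = r$ | $x(t) = (C_1 + C_2 t)\,e^{r t}$ |
| Complex conjugate roots $r_{1,2} = \alpha \pm i\beta$ | $x(t) = e^{\alpha t}(C_1 \cos\beta t + C_2 \sin\beta t)$ |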
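The case analysis on the roots of the characteristic equation can be sketched in a few lines. This is only an illustration, assuming the ODE is normalized as $\ddot{x} + b\dot{x} + cx = 0$; the function names `characteristic_roots` and `solution_form` are made up for this example.

```python
import cmath


def characteristic_roots(b, c):
    """Roots of r^2 + b*r + c = 0, returned as complex numbers."""
    s = cmath.sqrt(b * b - 4 * c)
    return (-b + s) / 2, (-b - s) / 2


def solution_form(b, c, tol=1e-12):
    """Return the shape of the general solution of x'' + b*x' + c*x = 0."""
    disc = b * b - 4 * c
    if disc > tol:
        # Two distinct real roots r1, r2.
        return "C1*exp(r1*t) + C2*exp(r2*t)"
    if abs(disc) <= tol:
        # One repeated real root r.
        return "(C1 + C2*t)*exp(r*t)"
    # Complex conjugate roots alpha +/- i*beta.
    return "exp(alpha*t)*(C1*cos(beta*t) + C2*sin(beta*t))"


print(characteristic_roots(3, 2), solution_form(3, 2))
print(characteristic_roots(2, 1), solution_form(2, 1))
print(characteristic_roots(0, 4), solution_form(0, 4))
```

The tolerance `tol` is an assumption added to make the repeated-root case robust to floating-point noise.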

Second-Order Linear Non-homogeneous ODE with Constant Coefficients

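When $f(t) \neq 0$, the ODE $\ddot{x} + b\,\dot{x} + c\,x = f(t)$ is non-homogeneous.

One simple form of the non-homogeneous term is $f(t) = P_m(t)\,e^{\lambda t}$, where $P_m(t)$ is a polynomial of degree $m$.

The total solution of the non-homogeneous ODE is the sum of the homogeneous solution and the particular solution. The particular solution is $x^*(t) = t^k Q_m(t)\,e^{\lambda t}$, where $Q_m(t)$ is a polynomial of degree $m$ whose coefficients are found by substituting $x^*$ into the ODE, and $k$ depends on whether $\lambda$ coincides with the roots of the characteristic equation $r^2 + br + c = 0$:

| Whether the coefficient $\lambda$ coincides with the roots | Particular solution |
| --- | --- |
| $\lambda$ is not a root | $x^*(t) = Q_m(t)\,e^{\lambda t}$ |
| $\lambda$ is a simple root | $x^*(t) = t\,Q_m(t)\,e^{\lambda t}$ |
| $\lambda$ is a repeated root | $x^*(t) = t^2 Q_m(t)\,e^{\lambda t}$ |

The particular solution when $f(t) = e^{\lambda t}\left[P_l(t)\cos\omega t + P_n(t)\sin\omega t\right]$ is $x^*(t) = t^k e^{\lambda t}\left[R^{(1)}_m(t)\cos\omega t + R^{(2)}_m(t)\sin\omega t\right]$, where $R^{(1)}_m$ and $R^{(2)}_m$ are polynomials of degree $m = \max(l, n)$:

| Whether the coefficient $\lambda \pm i\omega$ coincides with the roots | Particular solution |
| --- | --- |
| $\lambda \pm i\omega$ is not a root | $x^*(t) = e^{\lambda t}\left[R^{(1)}_m(t)\cos\omega t + R^{(2)}_m(t)\sin\omega t\right]$ |
| $\lambda \pm i\omega$ is a root | $x^*(t) = t\,e^{\lambda t}\left[R^{(1)}_m(t)\cos\omega t + R^{(2)}_m(t)\sin\omega t\right]$ |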

1.3 First-Order Linear Differential Equation System

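A first-order linear differential equation system can be written as

$$\dot{\mathbf{x}}(t) = A\,\mathbf{x}(t) + \mathbf{f}(t)$$

where the unknown $\mathbf{x}(t) \in \mathbb{R}^n$ is a vector-valued function of $t$, $A \in \mathbb{R}^{n \times n}$ is a constant matrix, and $\mathbf{f}(t) \in \mathbb{R}^n$ is the non-homogeneous term.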

First-Order Homogeneous Linear Differential Equation System

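If $\mathbf{f}(t) = \mathbf{0}$, the system $\dot{\mathbf{x}} = A\,\mathbf{x}$ is homogeneous.

Its solution is

$$\mathbf{x}(t) = e^{At}\,\mathbf{x}(0)$$

where $\mathbf{x}(0)$ is the initial condition.

If you can pretend everything is scalar for one second, the solution looks the same as that of the first-order linear ODE that we solved in the very beginning, thanks to the matrix exponential

$$e^{At} = \sum_{k=0}^{\infty} \frac{(At)^k}{k!}$$

You can use this definition to prove that the matrix exponential is indeed the solution to our differential equation: differentiating the series term by term gives $\frac{d}{dt}e^{At} = A\,e^{At}$. But if you are only using it to solve these differential equations, don't get involved in calculating the power series; just calculate the eigenvalues to write out the solution. When $A$ has $n$ linearly independent eigenvectors $\mathbf{v}_i$ with eigenvalues $\lambda_i$, the solution is $\mathbf{x}(t) = \sum_i c_i\,e^{\lambda_i t}\,\mathbf{v}_i$, with the constants $c_i$ fixed by $\mathbf{x}(0)$.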
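To see the power-series definition and the eigenvalue shortcut agree, here is a small sketch in pure Python. It assumes the example matrix $A = \begin{pmatrix} 0 & 1 \\ -2 & -3 \end{pmatrix}$ (eigenvalues $-1$ and $-2$) and the initial condition $\mathbf{x}(0) = (1, 0)^\top$, both chosen only for illustration. For this choice the eigenvalue route gives $x_1(t) = 2e^{-t} - e^{-2t}$ and $x_2(t) = -2e^{-t} + 2e^{-2t}$. The helpers `mat_mul` and `mat_exp` are hypothetical names, and the series is simply truncated after a fixed number of terms.

```python
import math


def mat_mul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def mat_exp(A, t, terms=40):
    """Truncated power series: sum over k < terms of (A*t)^k / k!."""
    n = len(A)
    At = [[a * t for a in row] for row in A]
    result = [[float(i == j) for j in range(n)] for i in range(n)]  # k = 0 term
    term = [row[:] for row in result]
    for k in range(1, terms):
        # term_k = term_{k-1} * (A*t) / k
        term = [[v / k for v in row] for row in mat_mul(term, At)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result


A = [[0.0, 1.0], [-2.0, -3.0]]
t = 1.0
E = mat_exp(A, t)
# x(t) = e^{At} x(0) with x(0) = (1, 0): the first column of e^{At}.
x_series = [E[0][0], E[1][0]]
# Same solution written out from the eigenvalues -1 and -2.
x_eigen = [2 * math.exp(-t) - math.exp(-2 * t),
           -2 * math.exp(-t) + 2 * math.exp(-2 * t)]
print(x_series, x_eigen)
```

In practice a library routine for the matrix exponential would be the safer choice; the truncated series here is only meant to mirror the definition.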

First-Order Non-homogeneous Linear Differential Equation System

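If $\mathbf{f}(t) \neq \mathbf{0}$, the system $\dot{\mathbf{x}} = A\,\mathbf{x} + \mathbf{f}(t)$ is non-homogeneous, and its solution is

$$\mathbf{x}(t) = e^{At}\,\mathbf{x}(0) + \int_0^t e^{A(t-\tau)}\,\mathbf{f}(\tau)\,d\tau$$

Again, this looks the same as the solution of the first-order linear non-homogeneous ODE.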

1.4 Higher-Order Linear ODE with Constant Coefficients

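Apologies for dragging in another notation for derivatives:

$$x^{(k)} = \frac{d^k x}{dt^k}$$

A univariate linear ODE of order $n$

$$x^{(n)} + a_{n-1}\,x^{(n-1)} + \cdots + a_1\,x^{(1)} + a_0\,x = f(t)$$

is equivalent to the following first-order $n$-dimensional linear differential equation system in $\mathbf{z} = \left(x, x^{(1)}, \ldots, x^{(n-1)}\right)^\top$:

$$\dot{\mathbf{z}} = \begin{pmatrix} 0 & 1 & 0 & \cdots & 0 \\ 0 & 0 & 1 & \cdots & 0 \\ \vdots & & & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \\ -a_0 & -a_1 & -a_2 & \cdots & -a_{n-1} \end{pmatrix} \mathbf{z} + \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 0 \\ f(t) \end{pmatrix}$$

Solve this linear differential equation system. The solution to the original $n$-th order ODE is the first component of $\mathbf{z}(t)$.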
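The reduction from an $n$-th order ODE to a first-order system is mechanical, so it is easy to sketch in code. The snippet below assumes the ODE is normalized so that the coefficient of $x^{(n)}$ is 1, and takes the remaining coefficients as a list `[a_0, ..., a_{n-1}]`; the function name `companion` is just a label for this example.

```python
def companion(coeffs):
    """Build the matrix A of z' = A z + (0, ..., 0, f(t)) for the ODE
    x^(n) + a_{n-1} x^(n-1) + ... + a_1 x' + a_0 x = f(t),
    where coeffs = [a_0, a_1, ..., a_{n-1}] and z = (x, x', ..., x^(n-1)).
    """
    n = len(coeffs)
    A = [[0.0] * n for _ in range(n)]
    # Rows 0..n-2 encode d/dt x^(i) = x^(i+1).
    for i in range(n - 1):
        A[i][i + 1] = 1.0
    # The last row encodes x^(n) = -a_0 x - ... - a_{n-1} x^(n-1) (+ f(t)).
    A[n - 1] = [-a for a in coeffs]
    return A


# x'' + 3 x' + 2 x = f(t)  ->  coeffs = [2, 3]
print(companion([2.0, 3.0]))
```

For the second-order example, the result is the matrix `[[0, 1], [-2, -3]]`, whose eigenvalues are the roots of the characteristic equation $r^2 + 3r + 2 = 0$.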

2 Stability Analysis

When a differential equation does not have analytical solutions, we turn to qualitative tools to analyze certain properties of our interest. An important property we are usually interested in is stability --- stability of the solution under small perturbations.

This line of tools was first studied by Lyapunov.

2.1 Fixed Point

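The general notion of stability is defined on the solution to a differential equation. The solution $x^*(t)$ of $\dot{x} = f(x)$ is stable if, for every $\epsilon > 0$, there exists a $\delta > 0$ such that any other solution $x(t)$ satisfies

$$\|x(t) - x^*(t)\| < \epsilon \quad \text{for all } t \geq t_0$$

so long as the initialization is close: $\|x(t_0) - x^*(t_0)\| < \delta$.

This is quite hard to verify directly.

More often, we talk about the stability of a fixed point, that is, a constant solution. Fixed points of the differential equation $\dot{x} = f(x)$ are the points $x^*$ that satisfy $f(x^*) = 0$.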

Visually, you can also look at the time-course trajectories of a dynamical system and roughly tell a fixed point is a stable fixed point if all trajectories converge to that point. Here is a schematic visualization of 4 of the most common kinds of fixed points stolen from Wikipedia.

2.2 Stability Analysis of Linear Dynamical System

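For linear dynamical systems, we often talk about whether the zero fixed point $\mathbf{x} = \mathbf{0}$ of $\dot{\mathbf{x}} = A\,\mathbf{x}$ is stable.

It is obvious that $\mathbf{x} = \mathbf{0}$ is a fixed point, since $A\,\mathbf{0} = \mathbf{0}$.

We know that the solution to this dynamical system is

$$\mathbf{x}(t) = e^{At}\,\mathbf{x}(0) = \sum_i c_i\,e^{\lambda_i t}\,\mathbf{v}_i$$

where $\lambda_i$ and $\mathbf{v}_i$ are the eigenvalues and eigenvectors of $A$ (assuming $A$ is diagonalizable) and the constants $c_i$ are fixed by $\mathbf{x}(0)$.

If all eigenvalues have negative real parts, every term decays, so the system will always converge to zero regardless of the initial condition.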

The 2D Case

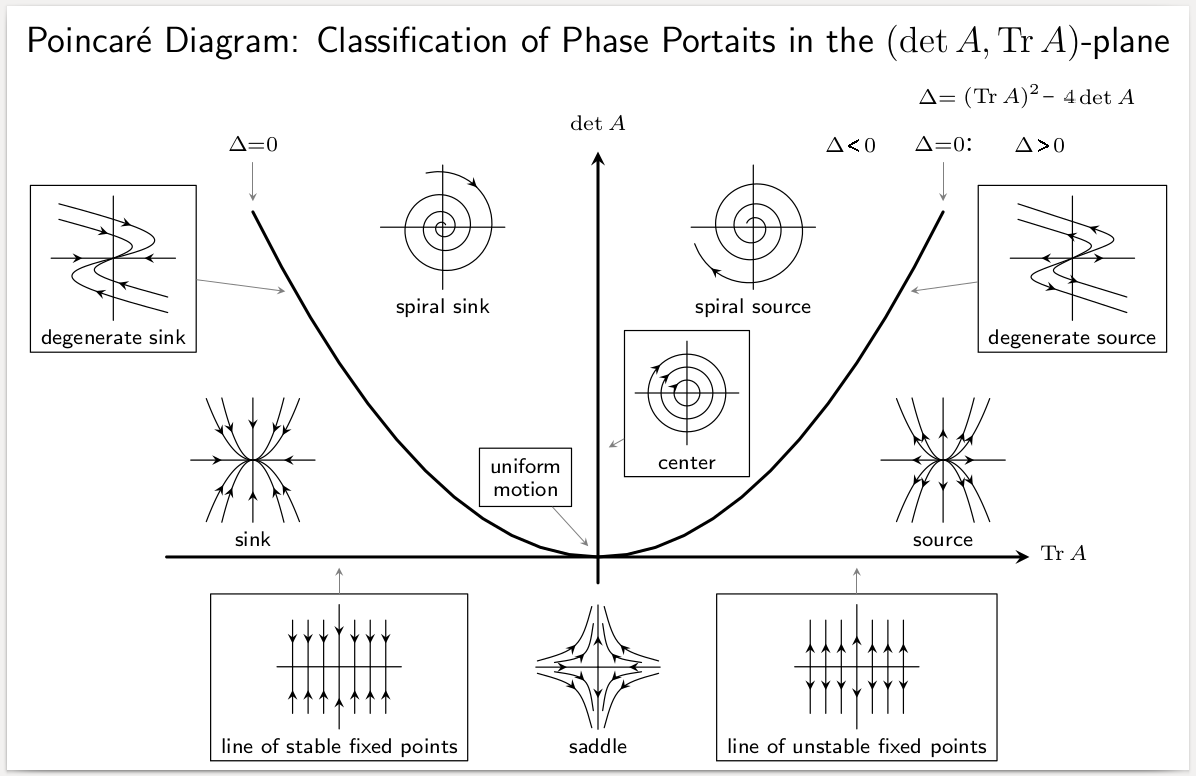

This picture comes up a lot in anything that relates to dynamical systems. But looking at it makes me a bit uneasy. Both its merit and sin are that it contains a lot of information.

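This picture is about judging which type the zero fixed point is by looking at the determinant and trace of the matrix $A$.

The underlying principle is the same: the zero fixed point is (asymptotically) stable if and only if all eigenvalues have negative real parts. For 2D systems, this can be done by looking at the determinant and trace, because the determinant equals the product of all eigenvalues and the trace equals the sum of all eigenvalues. For 2D systems, we have

$$\lambda_1 \lambda_2 = \det A, \qquad \lambda_1 + \lambda_2 = \operatorname{tr} A$$

Equivalently, we have

$$\lambda_{1,2} = \frac{\operatorname{tr} A \pm \sqrt{(\operatorname{tr} A)^2 - 4 \det A}}{2}$$

so both eigenvalues have negative real parts exactly when $\operatorname{tr} A < 0$ and $\det A > 0$.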
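The trace and determinant test for 2D systems can be written as a short classifier. This is a sketch under assumptions: the labels ("saddle", "node", "spiral", "center") follow the usual trace-determinant diagram and may not match the wording in the picture exactly, and the function name `classify_fixed_point` and the tolerance `tol` are made up for illustration.

```python
def classify_fixed_point(A, tol=1e-12):
    """Classify the zero fixed point of x' = A x for a 2x2 matrix A
    using only the trace and determinant of A."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    disc = tr * tr - 4 * det  # sign decides real vs complex eigenvalues
    if det < -tol:
        return "saddle"  # real eigenvalues of opposite sign
    if abs(det) <= tol:
        return "degenerate"  # at least one zero eigenvalue
    if abs(tr) <= tol:
        return "center"  # purely imaginary eigenvalues
    kind = "node" if disc >= 0 else "spiral"
    return ("stable " if tr < 0 else "unstable ") + kind


print(classify_fixed_point([[0.0, 1.0], [-2.0, -3.0]]))
print(classify_fixed_point([[-1.0, -2.0], [2.0, -1.0]]))
print(classify_fixed_point([[1.0, 0.0], [0.0, -1.0]]))
print(classify_fixed_point([[0.0, 1.0], [-1.0, 0.0]]))
```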

2.3 Stability Analysis of Nonlinear Dynamical System

There is not much to add here. For nonlinear dynamical systems, we linearize the dynamics around a fixed point and treat them with linear stability analysis.

"Linearize" means taking a first-order Taylor expansion. This is a legitimate simplification because stability is a property of how small perturbations influence the system, and a first-order Taylor expansion is usually accurate enough for small perturbations.

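Let's be more specific. Consider nonlinear dynamics:

$$\dot{x} = f(x)$$

Assume $x^*$ is a fixed point, so that $f(x^*) = 0$.

We use $\delta x = x - x^*$ to denote a small perturbation away from the fixed point. A first-order Taylor expansion gives

$$\dot{\delta x} = f(x^* + \delta x) \approx f(x^*) + J(x^*)\,\delta x = J(x^*)\,\delta x$$

where $J(x^*) = \left.\frac{\partial f}{\partial x}\right|_{x = x^*}$ is the Jacobian of $f$ evaluated at the fixed point.

Since the dynamics around $x^*$ are approximately linear with matrix $J(x^*)$, the fixed point is stable if all eigenvalues of $J(x^*)$ have negative real parts.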
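As a concrete illustration of linearization, the sketch below estimates the Jacobian by central differences and checks the sign of the eigenvalues' real parts. The damped pendulum $\dot\theta = \omega$, $\dot\omega = -\sin\theta - \gamma\omega$ is an example system chosen here, not one taken from the text, and the names `pendulum`, `jacobian`, `eig2` and `is_stable` are hypothetical helpers. The eigenvalue routine only handles 2D systems.

```python
import cmath
import math


def pendulum(state, damping=0.5):
    """Example nonlinear dynamics: a damped pendulum (an assumed test system)."""
    theta, omega = state
    return [omega, -math.sin(theta) - damping * omega]


def jacobian(f, x, eps=1e-6):
    """Central-difference estimate of the Jacobian of f at the point x."""
    n = len(x)
    J = [[0.0] * n for _ in range(n)]
    for j in range(n):
        xp, xm = list(x), list(x)
        xp[j] += eps
        xm[j] -= eps
        fp, fm = f(xp), f(xm)
        for i in range(n):
            J[i][j] = (fp[i] - fm[i]) / (2 * eps)
    return J


def eig2(J):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    tr = J[0][0] + J[1][1]
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    s = cmath.sqrt(tr * tr - 4 * det)
    return (tr + s) / 2, (tr - s) / 2


def is_stable(f, x_star):
    """Linear stability test: all eigenvalues of the Jacobian have Re < 0."""
    return all(lam.real < 0 for lam in eig2(jacobian(f, x_star)))


print(is_stable(pendulum, [0.0, 0.0]))      # pendulum hanging down
print(is_stable(pendulum, [math.pi, 0.0]))  # pendulum balanced upright
```

The hanging-down fixed point comes out stable and the upright one unstable, which matches physical intuition. Note that when an eigenvalue has zero real part, the linearization alone does not decide stability.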

3 From A Signal Processing Perspective

Signal processing provides a very nice perspective on dynamical systems, especially linear dynamical systems. Many topics become simpler or easier to interpret in the signal processing context. So far I have only (haphazardly) written up the Green function topic. Hopefully, I will add more to this section.

Green Function

The Green function is the impulse response of a non-homogeneous linear differential operator with specified initial or boundary conditions. If we know the Green function, the convolution between the Green function and the non-homogeneous term gives us a particular solution to the non-homogeneous ODE.

Proof I

The Green function is usually a late chapter in a differential equations textbook, but the impulse response is often the first chapter in a signals and systems textbook. This is probably because its proof looks quite simple there.

In the signals and systems context, the system is not necessarily defined by differential equations. It can be any linear time-invariant (LTI) system.

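Let us assume the impulse response of an LTI system is $g(t)$, that is, the input $\delta(t)$ produces the output $g(t)$:

$$\delta(t) \longrightarrow g(t)$$

Since the system is time-invariant, we can shift the time by $\tau$:

$$\delta(t - \tau) \longrightarrow g(t - \tau)$$

Since the system is linear, we can scale both sides by $f(\tau)$:

$$f(\tau)\,\delta(t - \tau) \longrightarrow f(\tau)\,g(t - \tau)$$

We then integrate both sides over $\tau$ (again by linearity) and finish the proof:

$$\int_{-\infty}^{\infty} f(\tau)\,\delta(t - \tau)\,d\tau \longrightarrow \int_{-\infty}^{\infty} f(\tau)\,g(t - \tau)\,d\tau$$

The left-hand side is $f(t)$ by the sifting property of the delta function, and the right-hand side is the convolution $(f * g)(t)$. So the response of the system to the input $f(t)$ is the convolution of $f$ with the impulse response.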

Proof II

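The Green function is also the inverse Laplace transform of the reciprocal of the differential operator's frequency-domain representation. Say we have a linear differential operator $L$ with constant coefficients and the ODE

$$L\,x(t) = f(t)$$

We can take the Laplace transform of both sides (assuming zero initial conditions) and look at the equation in the frequency domain:

$$L(s)\,X(s) = F(s)$$

Here $X(s)$ and $F(s)$ are the Laplace transforms of $x(t)$ and $f(t)$, and $L(s)$ is the polynomial in $s$ obtained by replacing each $\frac{d^k}{dt^k}$ in $L$ with $s^k$.

Now we are to find the function $g(t)$ that gives us

$$L\,g(t) = \delta(t)$$

We take the Laplace transform of both sides and get

$$L(s)\,G(s) = 1$$

We can solve for the Laplace transform of $g(t)$, namely $G(s) = \frac{1}{L(s)}$, so that $g(t) = \mathcal{L}^{-1}\left\{\frac{1}{L(s)}\right\}$.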

Through the Laplace transform procedure, we see that the frequency domain perspective is very useful for solving ODEs, because inverting a differential operator in the frequency domain is just division.

Solving First-Order Linear ODE with Green Function

Consider a first-order linear non-homogeneous ODE with a constant coefficient $a$:

$$\dot{x}(t) + a\,x(t) = f(t)$$

Definitions

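Homogeneous solution: solution to $\dot{x}(t) + a\,x(t) = 0$, which is $x_h(t) = C e^{-at}$.

The homogeneous solution is unique when an initial condition is given.

Particular solution: solutions to $\dot{x}(t) + a\,x(t) = f(t)$.

The particular solution is not unique (providing initial conditions won't help, since adding any homogeneous solution to a particular solution gives another particular solution).

Green function: solution to $\dot{g}(t) + a\,g(t) = \delta(t)$ with $g(t) = 0$ for $t < 0$.

The Green function is unique subject to the initial condition. Note that the Green function often resembles the homogeneous solution, because they should behave the same except at $t = 0$, where the impulse acts. Here $g(t) = e^{-at}$ for $t \geq 0$.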

Solutions

Sum of Homogeneous and Particular Solution

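The solution of a linear non-homogeneous ODE is the sum of its homogeneous and particular solutions. To make the solution unique, suppose we know the initial condition $x(0) = x_0$. For $\dot{x}(t) + a\,x(t) = f(t)$ with homogeneous solution $C e^{-at}$ and any particular solution $x_p(t)$, the solution is $x(t) = C e^{-at} + x_p(t)$ with $C = x_0 - x_p(0)$.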

Convolution of Green Function and Non-homogeneous Input

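Convolution of the Green function and the non-homogeneous input gives us a particular solution to the non-homogeneous ODE.

For zero initial condition $x(0) = 0$, the solution of $\dot{x}(t) + a\,x(t) = f(t)$ is

$$x(t) = (g * f)(t) = \int_0^t e^{-a(t - \tau)}\,f(\tau)\,d\tau$$

where $g(t) = e^{-at}$ for $t \geq 0$ (and $g(t) = 0$ for $t < 0$) is the Green function.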
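The convolution formula is easy to check numerically. The sketch below assumes the ODE $\dot{x} + a\,x = f(t)$ with $x(0) = 0$ and the Green function $g(t) = e^{-at}$ for $t \geq 0$, and approximates the convolution integral with a midpoint rule. The names `green` and `convolve_at`, the value $a = 2$, and the two test inputs are choices made for this example. For $f(t) = 1$ the exact solution is $(1 - e^{-at})/a$, and for $f(t) = \sin t$ with $a = 2$ it is $(2\sin t - \cos t + e^{-2t})/5$.

```python
import math


def green(t, a):
    """Green function of x' + a x = f: the causal impulse response."""
    return math.exp(-a * t) if t >= 0 else 0.0


def convolve_at(f, a, t, steps=20000):
    """Midpoint-rule approximation of the integral over [0, t] of
    g(t - tau) * f(tau) d tau, i.e. the zero-initial-condition solution."""
    h = t / steps
    total = 0.0
    for k in range(steps):
        tau = (k + 0.5) * h
        total += green(t - tau, a) * f(tau)
    return total * h


a, t = 2.0, 1.5
step_numeric = convolve_at(lambda tau: 1.0, a, t)
step_exact = (1 - math.exp(-a * t)) / a
sine_numeric = convolve_at(math.sin, a, t)
sine_exact = (2 * math.sin(t) - math.cos(t) + math.exp(-2 * t)) / 5
print(step_numeric, step_exact)
print(sine_numeric, sine_exact)
```

Each pair of printed values should agree closely, with the remaining gap coming from the quadrature step size.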

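Remark: Since I am a little paranoid about writing the complete solution as a convolution, I checked, and I find that this is possible but not pretty. With the initial condition $x(0) = x_0$, the complete solution of $\dot{x}(t) + a\,x(t) = f(t)$ is

$$x(t) = g(t) * \left[f(t) + x_0\,\delta(t)\right] = x_0\,e^{-at} + \int_0^t e^{-a(t - \tau)}\,f(\tau)\,d\tau, \qquad t \geq 0$$

where $g(t) = e^{-at}$ for $t \geq 0$ is the Green function.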